Will artificial intelligence ever rival true human thinking? | ABS-CBN

ADVERTISEMENT

Welcome, Kapamilya! We use cookies to improve your browsing experience. Continuing to use this site means you agree to our use of cookies. Tell me more!

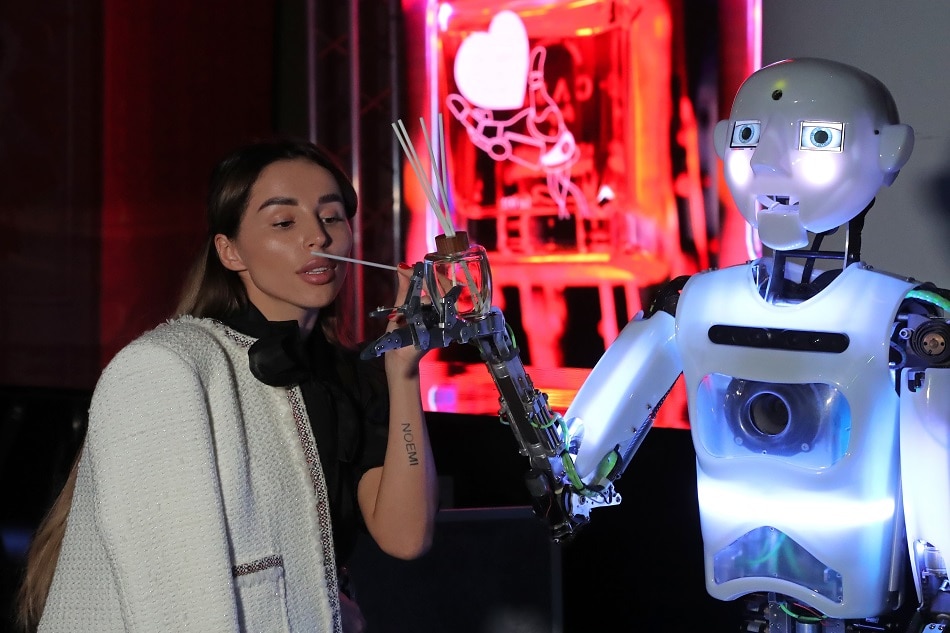

Will artificial intelligence ever rival true human thinking?

Will artificial intelligence ever rival true human thinking?

Deutsche Welle

Published Oct 20, 2022 04:36 AM PHT

Some of the world’s most advanced artificial intelligence (AI) systems, at least the ones the public hears about, are famous for beating human players at chess or poker. Other algorithms are known for their ability to learn how to recognize cats or their inability to recognize people with darker skin.

But are current AI systems anything more than toys? Sure, their ability to play games or identify animals is impressive, but does this help in creating useful AI systems? To answer this, we need to take a step back and question what the goals of AI are.

AI tries to predict the future by analyzing the past

The fundamental idea behind AI is simple: To analyze patterns from the past to make accurate predictions about the future.

This idea underlies every algorithm, from Google showing you adverts for things it predicts you want to buy to predicting whether an image of a face shows you or your neighbor. AI is also being used to predict whether patients have cancer by analyzing medical records and scans.

Pluribus, the poker-playing bot, beat the world’s top poker players in 2019 by predicting when it could out-bluff its human opponents.

Making predictions requires incredible amounts of data and the power to process it quickly. Pluribus, for example, filters data from billions of card games in a matter of milliseconds. It stitches patterns together to predict the best possible hand to play, always looking back at its data history to achieve the task at hand, never wondering what it means to look forward.

Pluribus, AlphaGo, Amazon Rekognition: there are many algorithms out there that are incredibly effective at their jobs, some so good they can beat human experts.

All these examples are proof of how powerful AI can be at making predictions. The question is which task you want it to be good at.

Human intelligence is general, artificial intelligence narrow

AI systems can really only do one task. Pluribus, for example, is so task-specific that it can’t even play another card game like blackjack, let alone drive a car or plan world domination.

This is very much unlike human intelligence. One of our key features is that we can generalize. Throughout life we become skilled at many different things, learning everything from how to walk to how to play card games or write articles. We might specialize in a few of those skills, even making a career out of some, but we’re still capable of learning and performing other tasks in our lives.

What’s more, we can also transfer skills, using knowledge of one thing to acquire skills in another. AI systems fundamentally don’t work this way. They learn through endless repetition, or at least until the energy bill gets too high, improving prediction accuracy through trillions of iterations and the sheer weight of calculation.

If developers want AI to be as versatile as human intelligence, then AI systems will need to become more generalizable and their intelligence more transferable.

Artificial general intelligence

And the narrowness of AI is changing. What’s set to revolutionize computing is artificial general intelligence (AGI). Much like humans, AGIs will be able to do several tasks at once, each one of them at an expert level.

AGI like this hasn’t been developed yet, but according to Irina Higgins, a research scientist at Google subsidiary DeepMind, we’re not far off.

"10-15 years ago people thought AGI was a crazy pipe dream. They thought it was 1,500 years away, maybe never. But it’s happening in our lifetime," Higgins told DW.

The modest plans are to use AGI to help us solve the really big problems in science, like space exploration or curing cancer.

But the more you read about the potential of AGI, the more the narrative sounds like science fiction rather than science: think silicon, plastic and metal beings calling themselves humans, or supercomputers running city-wide bureaucracies.

Transformative AI is broadening artificial intelligence

While AGI leans more towards science fiction, developments in the field of transformative AI belong firmly in the nonfiction category.

"Even though AI is very, very task-specific, people are broadening the tasks a computer can do," Eng Lim Goh, Chief Technology Officer at Hewlett Packard Enterprise, told DW.

Large language models (LLMs) are among the first transformative AI systems already in use.

"LLMs started by autocorrecting misspelled words in texts. Then they were trained to autocomplete sentences. And now, because they’ve processed so much text data, they can have a conversation with you," he said, referring to chatbots.

The capabilities of LLMs have been broadened further from there. Now the systems are able to provide responses not just to text but also to images.

"But keep in mind that these systems are still very narrow when you compare it to someone’s job. LLMs can’t understand human meaning of texts and images. They can’t creatively use texts and images like humans can," Goh said.

Some readers’ minds might now be wandering to AI ‘art’ – algorithms like DALL-E 2 that generate images based on input texts.

But is this art? Is this evidence that machines can create? It’s open for philosophical debate, but according to many observers, AI does not create art but merely imitates it.

To misquote Ludwig Wittgenstein, "my words have meaning, your AI’s do not."

Edited by: Carla Bleiker